Performing “Object Based Nearest Neighbor Classification” & Its Comparison With Pixel-Based Classification

Pixel-based classification is done at the per-pixel level, using only the spectral information available for that individual pixel (i.e. the values of neighboring pixels are ignored).
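
To make the per-pixel idea concrete, here is a minimal sketch in Python with NumPy (this is not part of the eCognition workflow). It treats every pixel as an n-dimensional vector, one value per spectral band, and gives it the class of the spectrally closest training sample. The function name, the tiny 2x2 image, the sample spectra and the class ids are all made up for illustration.

```python
import numpy as np

def classify_pixels_nearest_neighbor(image, sample_spectra, sample_labels):
    """Label every pixel with the class of its spectrally closest training sample.

    image:          (rows, cols, bands) array
    sample_spectra: (n_samples, bands) array of training pixel spectra
    sample_labels:  (n_samples,) array of class ids
    """
    rows, cols, bands = image.shape
    # One n-dimensional vector per pixel; neighbouring pixels play no role.
    pixels = image.reshape(-1, bands).astype(float)
    # Squared Euclidean distance from every pixel to every training sample.
    diffs = pixels[:, None, :] - sample_spectra[None, :, :].astype(float)
    distances = (diffs ** 2).sum(axis=2)
    nearest = distances.argmin(axis=1)
    return sample_labels[nearest].reshape(rows, cols)

# Hypothetical 2x2 image with 4 bands (blue, green, red, NIR).
image = np.array([
    [[10, 20, 15, 90], [12, 22, 14, 95]],
    [[60, 60, 65, 30], [58, 62, 70, 28]],
])
sample_spectra = np.array([[11, 21, 15, 92], [59, 61, 66, 29]])
sample_labels = np.array([1, 2])  # made-up classes: 1 = vegetation, 2 = built-up

class_map = classify_pixels_nearest_neighbor(image, sample_spectra, sample_labels)
print(class_map)
```

Each pixel is classified entirely on its own, so a single noisy pixel gets its own (possibly wrong) label.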

Object-based classification is done on groups of pixels, taking into account the spatial properties of the pixels as they relate to each other. As a crude example, an image might be partitioned into n segments of equal size, and each segment would then be given a class (e.g. contains object / does not contain object).
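
The crude equal-size example can be sketched in Python with NumPy as follows. This is only an illustration under stated assumptions: real segmentation in eCognition produces irregular objects, whereas this sketch cuts the image into square blocks, uses the mean spectrum of each block as its only feature, and labels the whole block by nearest neighbor. The function name, image values and class ids are hypothetical.

```python
import numpy as np

def classify_blocks_nearest_neighbor(image, block, sample_features, sample_labels):
    """Split the image into square blocks and label each block as a whole."""
    rows, cols, bands = image.shape
    # This sketch assumes the image divides evenly into blocks.
    if rows % block or cols % block:
        raise ValueError("image size must be a multiple of the block size")
    # Group pixels by block: (block_row, row_in_block, block_col, col_in_block, band).
    grouped = image.reshape(rows // block, block, cols // block, block, bands)
    block_means = grouped.astype(float).mean(axis=(1, 3))  # mean spectrum per block
    features = block_means.reshape(-1, bands)
    diffs = features[:, None, :] - sample_features[None, :, :].astype(float)
    nearest = (diffs ** 2).sum(axis=2).argmin(axis=1)
    block_labels = sample_labels[nearest].reshape(rows // block, cols // block)
    # Expand the per-block labels back to a per-pixel map.
    return np.repeat(np.repeat(block_labels, block, axis=0), block, axis=1)

# Hypothetical 4x4 image with 2 bands: left half one cover type, right half another.
image = np.zeros((4, 4, 2))
image[:, :2] = [20, 90]
image[:, 2:] = [70, 30]
image[1, 0] = [60, 40]  # one noisy pixel inside the left half

sample_features = np.array([[20, 90], [70, 30]])
sample_labels = np.array([1, 2])  # made-up class ids

object_map = classify_blocks_nearest_neighbor(image, 2, sample_features, sample_labels)
print(object_map)
```

Because the label is decided for the group, the noisy pixel in the top-left block takes the class of its block instead of being classified on its own.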

The prospects of a newer classification concept, object-based classification, are currently being explored. Recent studies have reported that it outperforms traditional per-pixel classifiers. Its basic principle is to make use of important information (shape, texture and contextual information) that is present only in meaningful image objects and their mutual relationships.

Image Source: Pix Bay

Methodology

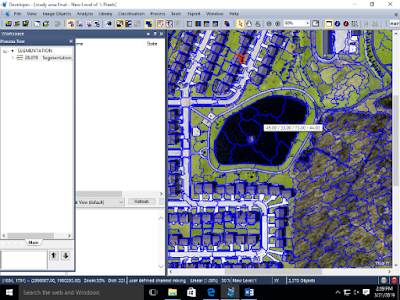

1. Open the eCognition software.

2. Click the “Create new project” option.

3. Import the “Study area image” from the workspace.

4. Click on the layers and rename each one: layer 1 as blue, layer 2 as green, layer 3 as red, and layer 4 as NIR.

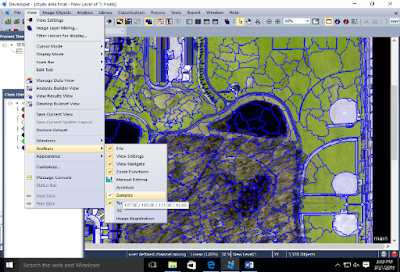

5. Go to the “Process” option on the top toolbar and open the “Process tree”. All operations in eCognition are executed through the process tree.

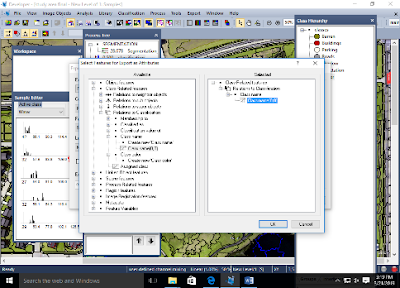

6. Right-click in the “Process tree” window and choose “Append new”. Uncheck the “Automatic” option, type the name “Segmentation”, and click OK.

7. The parent process “Segmentation” has been added. Now click “Segmentation” and select the “Insert child” option to add child processes.

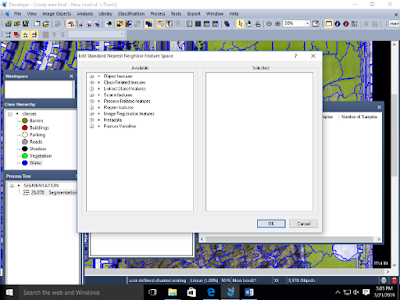

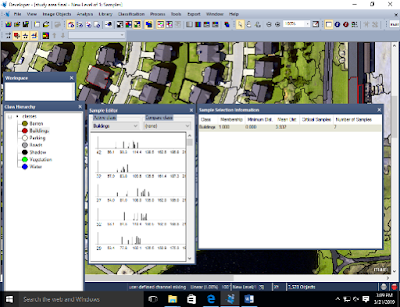

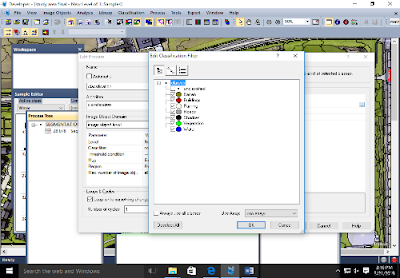

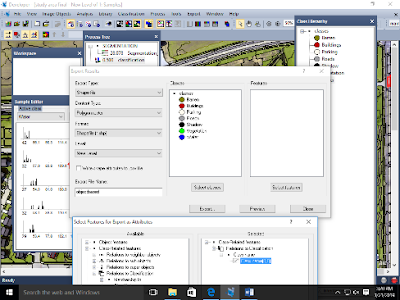

8. Click the first segmentation algorithm, “Multiresolution Segmentation”, and select the execute option.

9. Next, execute “Multiresolution, Spectral difference”.

Note the parameters used when executing Multiresolution Segmentation:

- Scale (70): controls the average size of the image objects.

- Shape (0.4): the weight given to the geometric form of the objects.

- Compactness (0.6): the higher the value, the more compact the image objects may be.
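
To see how the shape and compactness values relate to each other, here is a small Python sketch. It assumes the commonly documented convention for multiresolution segmentation: the color (spectral) weight is 1 minus the shape weight, and the shape weight is itself split between compactness and smoothness, with smoothness being 1 minus compactness. The function name and the returned dictionary keys are hypothetical and are not part of eCognition.

```python
def multiresolution_weights(shape, compactness):
    """Split the homogeneity criterion into color, compactness and smoothness shares.

    Assumed convention (not taken from this post): color = 1 - shape,
    smoothness = 1 - compactness, and both shape terms are scaled by `shape`.
    """
    if not (0.0 <= shape <= 1.0 and 0.0 <= compactness <= 1.0):
        raise ValueError("shape and compactness must lie between 0 and 1")
    color = 1.0 - shape             # share left for spectral (color) homogeneity
    smoothness = 1.0 - compactness  # complement of compactness inside the shape share
    return {
        "color": color,
        "shape_compactness": shape * compactness,
        "shape_smoothness": shape * smoothness,
    }

# Values used in this tutorial: shape 0.4, compactness 0.6.
weights = multiresolution_weights(shape=0.4, compactness=0.6)
for name, value in weights.items():
    print(f"{name}: {value:.2f}")
```

Under that assumption, the settings used here put 0.60 of the weight on color, 0.24 on compactness and 0.16 on smoothness, so spectral similarity still dominates the segmentation.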

Screenshots are at the end of the blog post.

Conclusion and Comparison of "Object Based Nearest Neighbor Classification" & "Pixel-Based Classification".

| Pixel Based Classification | Object Based Nearest Neighbor Classification |
| --- | --- |
| Classification is done on a per pixel level, using only the spectral information available for that individual pixel (i.e. values of pixels within the locality are ignored). | Classification is done on a localized group of pixels, taking into account the spatial properties of each pixel as they relate to each other. |
| In this sense each pixel represents a training example for a classification algorithm, and this training example is in the form of an n-dimensional vector, where n is the number of spectral bands in the image data. | In this sense a training example for a classification algorithm consists of a group of pixels, and the trained classification algorithm accordingly outputs a class prediction for pixels on a group basis. |
| Accordingly, the trained classification algorithm outputs a class prediction for each individual pixel in an image. | For a crude example, an image might be partitioned into n segments of equal size, and each segment would then be given a class (e.g. contains object / does not contain object). |

Traditional per-pixel approaches were not very effective. This was shown by classifying the entire image using the most widely used classifier rule and the spectra of the selected land cover classes generated from the image.

The object-based classification system therefore appears to be a better approach than traditional per-pixel classifiers for urban mapping using high-resolution imagery.

References

- K. Leukert, A. Darwish and W. Reinhardt, “Urban Land-cover Classification: An Object-based Perspective,” 2nd Joint Workshop on Remote Sensing and Data Fusion over Urban Areas, Berlin, May 2003.

- P. Mather, Computer processing of remotely-sensed images, Chichester: Wiley, 1999.

- B. Jähne, Digitale Bildverarbeitung, Berlin: Springer, 1993.

- R. Haralick, L. Shapiro, Computer and robot vision, Vol. 1, Reading, Mass.: Addison-Wesley, 1992.

- Definiens imaging, eCognition user guide [Online]. Available: http://www.definiens-imaging.com/documents/index.htm, April 2003.

- NIMA, Geospatial Standards and Specifications [Online]. Available: http://164.214.2.59/publications/specs/index.html, April 2003.

- T. Lillesand, R. Kiefer, Remote Sensing and Image Interpretation, New York: John Wiley & Sons, 2000.
